Wan AI Model Quick View

Wan 2.6 — Narrative & Consistency

The latest state-of-the-art model, designed for complex storytelling. It features multi-shot coherence, character reference (identity retention), and audio synchronization.

- Up to 15-second generation

- Multi-shot Storytelling

- Character Roleplay (Ref-to-Video)

Wan 2.5 — Everyday Creation

Reliable performance for standard text-to-video tasks. Best for quick drafts, efficient day-to-day content, and auto-dubbing workflows.

- Fast iteration & drafts

- Standard Auto-Dubbing

- High volume production

Wan 2.2 — Rapid Prototyping

The lightweight foundation model. 50% faster than newer versions, ideal for silent video tests and low-latency prototyping.

- Ultra-fast Inference

- Silent Generation

- Low VRAM Usage

Unleash Limitless Creativity: What Can You Build with Wan AI?

From cinematic trailers to e-commerce assets, transform your ideas into high-fidelity 1080p videos.

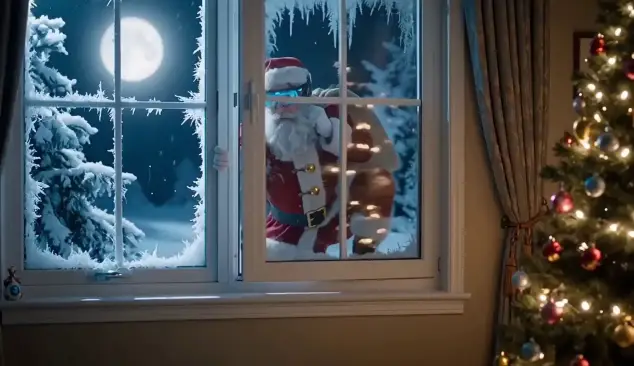

Cinematic Storytelling

Craft hyper-realistic sequences featuring complex camera maneuvers, such as pans, zooms, and dollies, paired with cinematic lighting. Wan AI excels at rendering dynamic light shifts and accurate physics, making it the perfect tool for creating Sci-Fi, thriller, or documentary-style trailers.

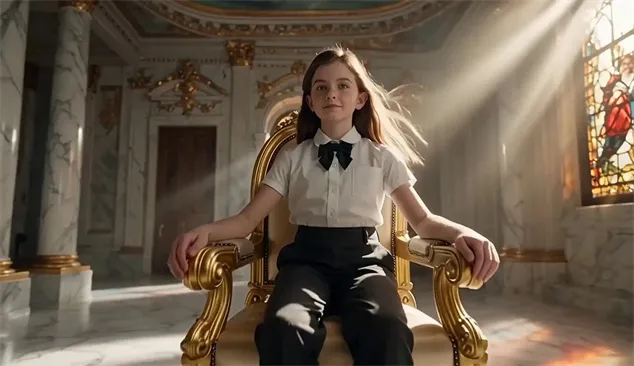

Character Performance

A Wan 2.6 Flagship Feature

Simply upload a single portrait and watch Wan AI bring the character to life: speaking, walking, or acting. The model maintains strict consistency across facial features and attire, ensuring the character's identity remains intact throughout the performance.

Commercial & Product Visualization

Transform static product shots into dynamic video assets. Eliminate the need for expensive production crews by generating smooth product rotations, liquid physics, or virtual model showcases. Create high-conversion content that is ready to publish as TikTok and Instagram ads.

Stylized Anime & Art

Supports a vast spectrum of artistic styles, ranging from traditional ink wash to modern 3D animation. This makes it an ideal tool for indie game developers looking to generate immersive cutscenes or animated concept art efficiently.

The Wan 2.6 Advantage: Redefining AI Video Generation

Why the latest 2.6 architecture is the superior choice for professional creators and studios.

Native Audio-Visual Synthesis (True Sync)

One of the few models with native audio generation. It generates not just visuals, but perfectly synchronized sound effects (SFX) and background music that match the motion.

Reference-to-Video (Identity Consistency)

Powered by advanced ID-retention technology. With just one reference image, the model "memorizes" the subject, delivering consistent character identity across different angles and actions—the key to long-form narratives.

Extended 15s Narrative Generation

Supports generating up to 15 seconds of continuous, coherent video in a single shot. This allows for complex actions and complete story beats without the need for constant stitching or morphing.

See What's Possible: Made with Wan 2.6

Explore a gallery of unedited, raw footage generated directly from Wan 2.6. Experience the breakthrough in 15s coherence, native audio, and character consistency.

Want to create videos like these?

Try Wan AI Now

Frequently Asked Questions

Wan 2.6 represents a significant architectural leap over the legacy 2.5 version. While Wan 2.5 is well suited to short clips and quick drafts, Wan 2.6 introduces generation of up to 15 seconds, native audio-visual synthesis (sound effects and music), and advanced Reference-to-Video capabilities for maintaining character consistency across shots.

To run Wan 2.6 efficiently using local tools like ComfyUI, we recommend an NVIDIA GPU with at least 16 GB of VRAM (e.g., RTX 4080, RTX 4090, or professional-grade cards). For users with 8 GB to 12 GB of VRAM, we suggest using the Wan 2.5 model or accessing Wan 2.6 via our Cloud Playground to prevent out-of-memory errors.
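As a rough illustration of the VRAM guidance above, the Python sketch below maps a GPU's memory size to a suggested setup. The function name `recommend_wan_setup` and the returned labels are hypothetical and exist only for this example. The 16 GB threshold and the 8 GB to 12 GB advice come from the answer above. The handling of cards between 12 GB and 16 GB (treated like the 8 GB to 12 GB tier) and of cards under 8 GB (cloud only) is an assumption, since the guidance does not cover those ranges.

```python
def recommend_wan_setup(vram_gb: float) -> str:
    """Suggest a Wan setup for a GPU with `vram_gb` gigabytes of VRAM.

    The returned labels are illustrative only, not official product names.
    """
    if vram_gb < 0:
        raise ValueError("vram_gb must be non-negative")
    if vram_gb >= 16:
        # Stated recommendation: at least 16 GB to run Wan 2.6 locally.
        return "wan-2.6-local"
    if vram_gb >= 8:
        # Stated for 8 GB to 12 GB; applying it up to 16 GB is an assumption.
        return "wan-2.5-local-or-wan-2.6-cloud"
    # Assumption: the guidance does not cover cards under 8 GB.
    return "wan-2.6-cloud"


if __name__ == "__main__":
    for vram in (24, 12, 6):
        print(f"{vram} GB -> {recommend_wan_setup(vram)}")
```

If PyTorch is installed, `torch.cuda.get_device_properties(0).total_memory / 1024**3` reports the total memory of the first CUDA device in GiB, which could be passed to the function above.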

The licensing depends on the specific version. Wan 2.5 is open-source under the Apache 2.0 license, allowing for free commercial adaptation and usage. However, Wan 2.6 is a proprietary model. Commercial use of Wan 2.6 typically requires a commercial license or subscription through the official cloud API service. Always verify the specific license file included with your download.

No, you cannot simply swap the model weights. Wan 2.6 utilizes a different architecture and requires updated custom nodes and specific JSON workflows. We provide dedicated Wan 2.6 workflows and compatible Text Encoders (T5/CLIP) in our resources section to ensure a smooth setup.

Wan AI distinguishes itself through control and accessibility. While Sora and Runway are closed ecosystems, the Wan series (specifically Wan 2.5) offers local deployment options for maximum data privacy. The new Wan 2.6 matches top-tier proprietary models in visual fidelity (1080p, 15s duration) while offering unique features like single-image character roleplay.

Yes. Wan 2.6 features native audio synthesis. It does not just add random music; it analyzes the visual motion to generate synchronized sound effects (SFX) and background ambience that match the video's context, eliminating the need for external post-production audio tools.